We’ve all walked into a clothes store and been asked, often right at the door, whether we were alright and whether we were looking for anything in particular. You may or may not find this helpful, but it’s certainly valuable to the store owner, who can use the answer to make sure customers find what they want. Now imagine you are the store owner, and the shopping centre has asked you not to approach customers unless they come forward and request help. Suddenly you’d lose the ability to proactively help customers find what they want or to highlight your best products, and you’d be forced into a “one size fits all” approach. You’d also lose crucial feedback on which types of customers do and don’t find what they are after.

Unfortunately, many websites are facing exactly this dilemma now that Google is encrypting search queries. As most websites have no human interaction with their visitors, the query typed into a search engine is one of the few clues a web business has for understanding them. The accountability of SEO will almost certainly take a hit (controversially, PPC is spared, since encryption only affects organic visits), although the percentage of traffic filtered out could get fairly high before straightforward projections for many keywords stop being feasible.

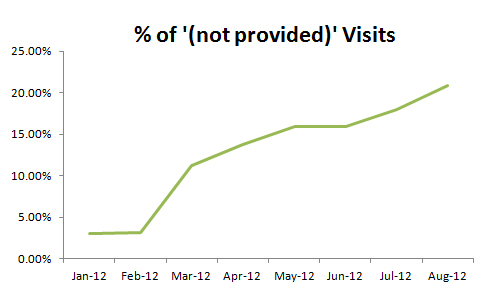

Many side effects beyond the lack of keyword data, however, become problematic with even a small to moderate percentage of traffic filtered out. This is a real issue: our own client data shows that more and more searches are being encrypted as Google gets more traction with Google accounts, and encryption rates broke the 20% mark last month.

Customised Experiences

Behavioural targeting is not an especially new concept, but it’s probably still an industry very much in its infancy, and one to which the encryption of search queries is likely to deal a huge blow. Without knowing what the customer was initially looking for, a website has to rely on later user interactions, like a login, to provide a personalised experience.

A retailer that wants to display related products to users who search with a given brand intent can no longer do so for visitors whose queries are encrypted. The problem extends to many other clever attempts at customisation: the B2B company that features a client case study on its homepage would have to wait for the user to visit another case study before it could even guess which industry or service type that user is interested in.

All of this means that users receive a less relevant experience, and the brand loses its ability to put its best foot forward.

Weaker Data Capture

A customised site experience (or the lack of one) is one issue, but a much bigger issue for a larger share of websites is the lost opportunity to capture more data about the customer for future use.

Many websites capture the keyword and other details about the traffic source and add them as hidden form fields when the customer makes a purchase or enquiry.
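As a rough illustration of that kind of capture, here is a minimal Python sketch that pulls the keyword out of a referrer URL so it can be stored alongside a form submission. The function name and field names are hypothetical, and it assumes the search engine passes the query in a `q` parameter, which not every engine does. It is not how any particular site or analytics package does it.

```python
from urllib.parse import urlparse, parse_qs


def traffic_source_fields(referrer, landing_page):
    """Build the values a site might store in hidden form fields.

    Assumption: the search query, when present, is in the referrer's
    `q` parameter. Field names here are purely illustrative.
    """
    parsed = urlparse(referrer)
    query = parse_qs(parsed.query)  # blank values are dropped by default
    keyword = query.get("q", [""])[0]
    return {
        "source_host": parsed.netloc,
        "keyword": keyword,  # empty when the query was not passed on
        "landing_page": landing_page,
    }


with_keyword = traffic_source_fields(
    "https://www.google.com/search?q=red+shoes", "/shoes"
)
encrypted = traffic_source_fields("https://www.google.com/", "/shoes")
```

In the second call the referrer carries no query at all, so the keyword field comes back empty. That is the situation an encrypted search leaves the site in: the source and landing page are still known, but the intent is not.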

Understanding the initial intent of a customer who has bought a product, subscribed to a newsletter or submitted a form can say a lot about that customer and really help with the relationship in the long term. It can also provide a vital clue in the short term about what the customer might be looking for before the first interaction.

Managing Without the Data

Ultimately, there’s probably little that can be done to reverse the tide, as Google’s concerns about privacy are being driven by far bigger forces than the search marketing industry.

In the short term, agencies or in-house staff can use projections to estimate how much traffic each keyword sent. Most people I know who are doing this use a simple proportional projection: if 60% of the recorded traffic came through example keywords, then 60% of the ‘not provided’ traffic probably came via example keywords too.
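The simple projection described above can be sketched in a few lines of Python. The function name and the visit counts are made up for illustration. The only real assumption is the one already stated: hidden traffic splits across keywords in the same proportions as the traffic that was recorded.

```python
def project_not_provided(keyword_visits, not_provided_visits):
    """Spread 'not provided' visits across keywords in proportion
    to each keyword's share of the recorded keyword traffic."""
    known_total = sum(keyword_visits.values())
    if known_total == 0:
        # Nothing recorded, so there is no share to project from.
        return {kw: 0.0 for kw in keyword_visits}
    return {
        kw: visits + not_provided_visits * visits / known_total
        for kw, visits in keyword_visits.items()
    }


# Illustrative numbers only: 60% of recorded visits came via "example keywords".
recorded = {"example keywords": 600, "other keywords": 400}
projected = project_not_provided(recorded, not_provided_visits=250)
print(projected)
```

With these numbers, 60% of the 250 hidden visits (150) are credited to "example keywords", taking its projected total from 600 to 750.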

Better attempts to project traffic do the above at a landing page level, but that involves quite advanced spreadsheets and is still only a ‘best guess’; long tail keywords will never be accounted for properly in such projections.
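For anyone who would rather script the landing page version than build it in a spreadsheet, a minimal Python sketch might look like the following. The data layout, function name and figures are all assumptions for illustration. Each page’s ‘not provided’ visits are shared out using only the keywords recorded for that same page.

```python
from collections import defaultdict


def project_by_landing_page(known_visits, not_provided_by_page):
    """known_visits: {(landing_page, keyword): visits}
    not_provided_by_page: {landing_page: hidden visits}
    Returns (projected visits per keyword, hidden visits left unallocated).
    """
    page_totals = defaultdict(int)
    for (page, _keyword), visits in known_visits.items():
        page_totals[page] += visits

    projected = defaultdict(float)
    for (page, keyword), visits in known_visits.items():
        hidden = not_provided_by_page.get(page, 0)
        total = page_totals[page]
        extra = hidden * visits / total if total else 0.0
        projected[keyword] += visits + extra

    # Pages with hidden visits but no recorded keywords cannot be projected.
    unallocated = sum(
        hidden
        for page, hidden in not_provided_by_page.items()
        if page_totals.get(page, 0) == 0
    )
    return dict(projected), unallocated


# Illustrative numbers only.
known = {
    ("/shoes", "red shoes"): 80,
    ("/shoes", "brand shoes"): 20,
    ("/hats", "brand hats"): 50,
}
hidden = {"/shoes": 50, "/hats": 10, "/new-page": 5}
by_keyword, leftover = project_by_landing_page(known, hidden)
```

The `leftover` figure is the weakness in miniature: a page that only ever received encrypted visits has no recorded keywords to project from, which is exactly where long tail terms tend to disappear.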

Furthermore, many companies like to know, to the penny, what each campaign is contributing, and a best guess just won’t do for those reports.

Finally, as highlighted above, the traffic source is useful not just for analysing trends but at an individual customer level too. Brands may look to capture the landing page going forward (or, even better, the full navigation path), but this data requires closer attention to be useful.